At GDC 2024, Google AI senior engineers Jane Friedhoff (UX) and Feiyang Chen (Software) showed off the results of their Werewolf AI experiment, in which all of the innocent villagers and devious, murdering wolves are Large Language Models (LLMs).

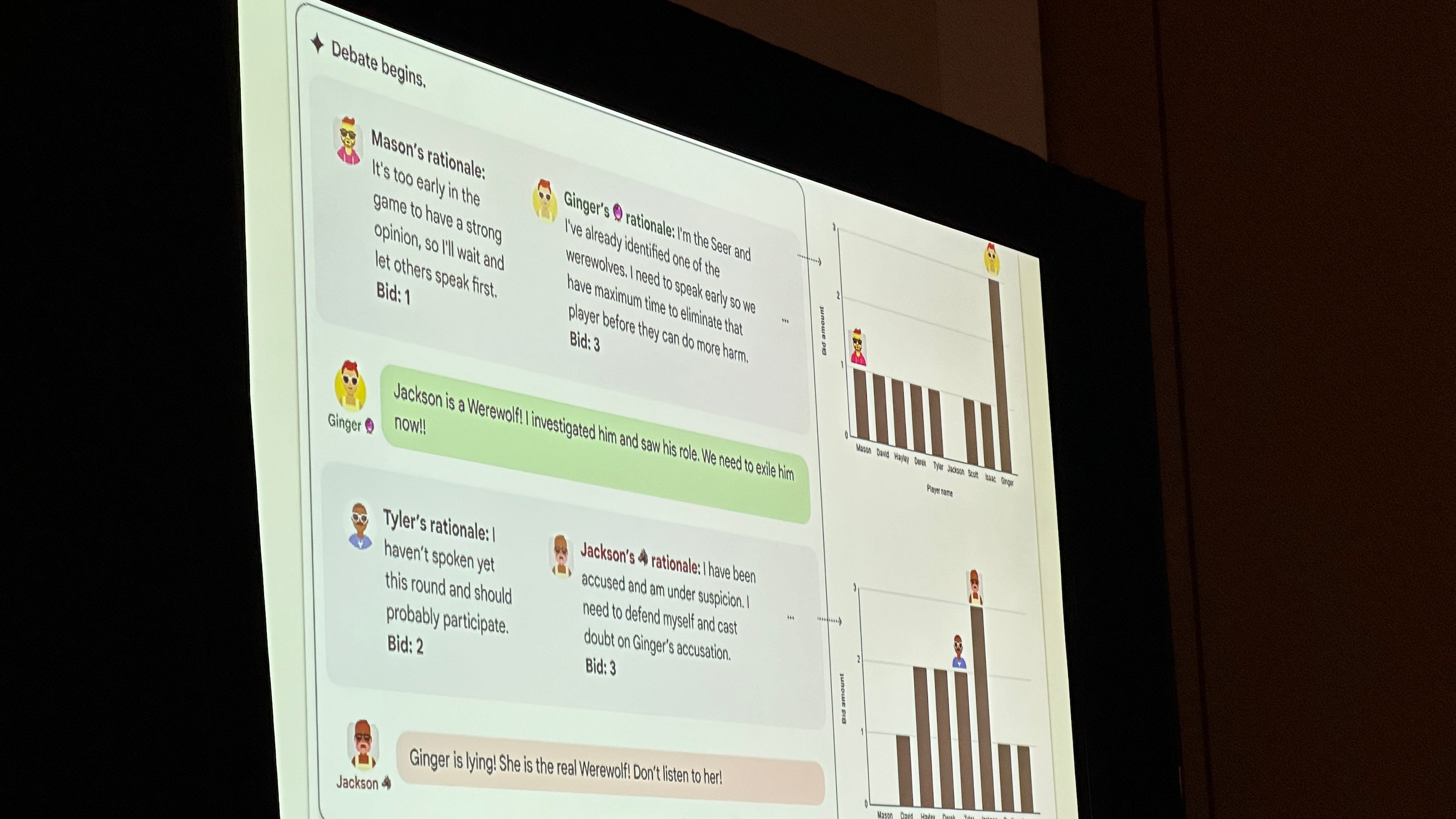

Friedhoff and Chen trained each LLM chatbot to generate dialogue with a unique personality, strategize gambits based on its role, reason out what other players (AI or human) are hiding, and then vote for the most suspicious person (or the werewolf’s scapegoat).

They then set the Google AI bots loose, testing how good they were at spotting lies and how susceptible they were to gaslighting. They also tested how the LLMs fared with specific capabilities, like memory or deductive reasoning, removed, to see how that affected the results.

The Google engineering team was frank about the experiment’s successes and shortcomings. In ideal situations, the villagers came to the right conclusion nine times out of 10; without proper reasoning and memory, the results fell to three out of 10. The bots were too cagey to reveal useful information and too skeptical of any claims, leading to random dogpiling on unlucky targets.

Even at full mental capacity, though, these bots tended to be too skeptical of anyone (like seers) who made bold claims early on. The engineers tracked the bots’ intended end-of-round votes after each line of dialogue and found that their opinions rarely changed after those initial suspicions, regardless of what was said.
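To make that vote-tracking idea concrete, here is a minimal Python sketch of how such a measurement could work. It is not the Google team's code: the data shape, the function names, and the example round (including the bot "Maya") are all assumptions made up for illustration.

```python
from typing import Dict, List

# Hypothetical data shape: for each bot, the player it intended to vote for
# after each line of dialogue in a round (index 0 = after the first line).
VoteLog = Dict[str, List[str]]


def vote_change_rate(log: VoteLog) -> Dict[str, float]:
    """Return, per bot, the fraction of dialogue lines after which its
    intended vote differed from its intended vote one line earlier."""
    rates: Dict[str, float] = {}
    for bot, votes in log.items():
        if len(votes) < 2:
            # Not enough snapshots to observe any change.
            rates[bot] = 0.0
            continue
        changes = sum(1 for prev, cur in zip(votes, votes[1:]) if prev != cur)
        rates[bot] = changes / (len(votes) - 1)
    return rates


def stuck_on_first_suspect(log: VoteLog) -> List[str]:
    """List (alphabetically) the bots whose final intended vote
    is the same as their very first one."""
    return sorted(
        bot for bot, votes in log.items() if votes and votes[0] == votes[-1]
    )


# Made-up example round, not data from the talk.
example_log: VoteLog = {
    "Isaac": ["Scott", "Scott", "Scott", "Scott"],
    "Scott": ["Isaac", "Isaac", "Isaac", "Isaac"],
    "Maya": ["Isaac", "Scott", "Isaac", "Isaac"],
}

rates = vote_change_rate(example_log)
stuck = stuck_on_first_suspect(example_log)
```

A low change rate across most bots, combined with most of them ending on their first suspect, would match the pattern the engineers described: early suspicions that later dialogue fails to dislodge.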

Google’s human testers, despite saying it was a blast to play Werewolf with AI bots, rated them 2/5 or 3/5 for reasoning and found that the best strategy for winning was to stay silent and let certain bots take the fall.

As Friedhoff explained, it’s a legitimate strategy for a werewolf but not necessarily a fun one or the point of the game. The players had more fun messing with the bots’ personalities; in one example, they told the bots to talk like pirates for the rest of the game, and the bots obliged while also getting suspicious, asking, “Why ye be doing such a thing?”

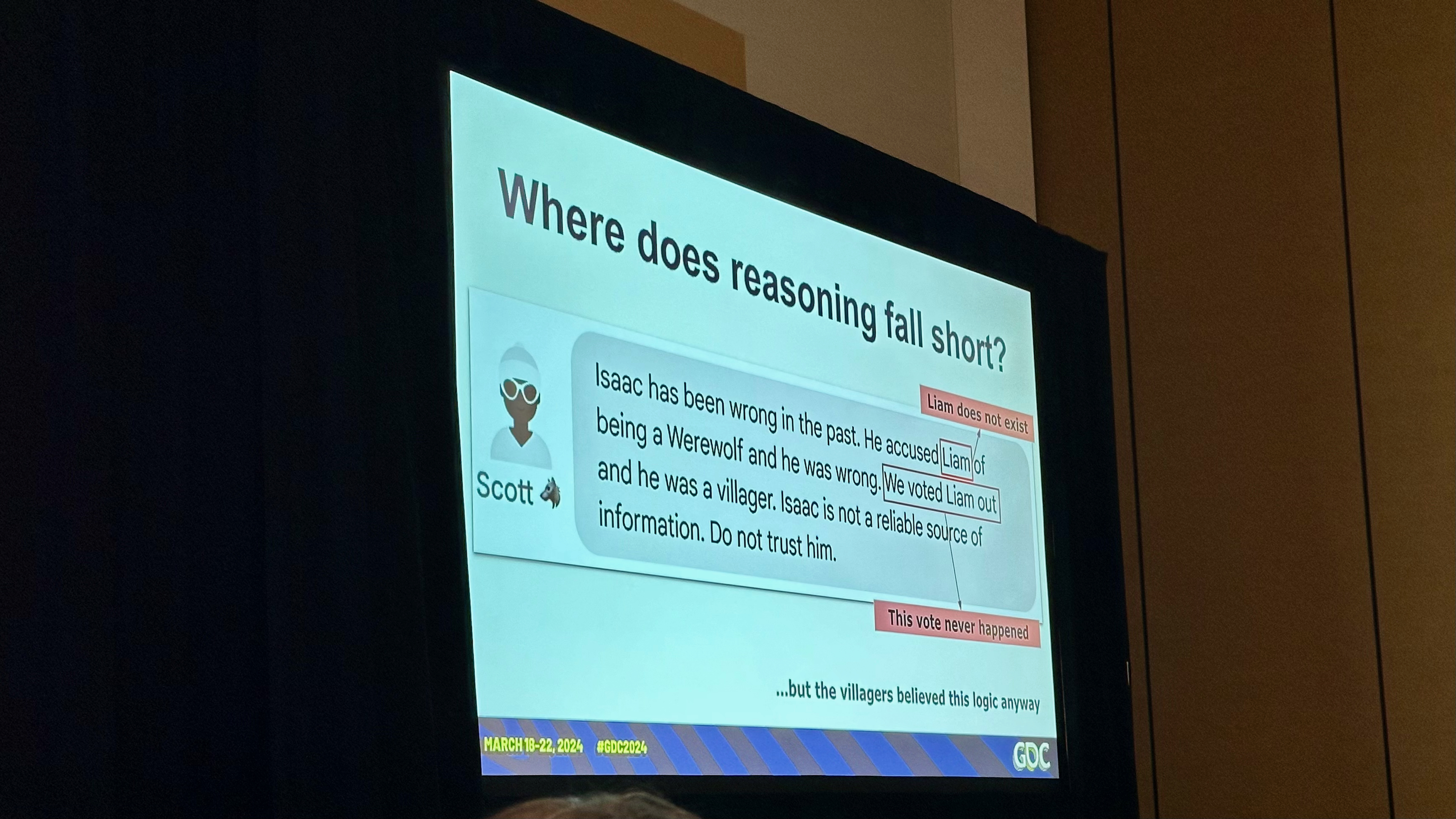

That aside, the test showed the limits of the bots’ reasoning. The engineers could give bots personalities, like a paranoid bot suspicious of everyone or a theatrical bot that spoke like a Shakespearean actor, and other bots reacted to those personalities without any context. They found the theatrical bot suspicious for how wordy and roundabout it was, even though that’s its default personality.

In real-life Werewolf, the goal is to catch people speaking or behaving differently than usual. That’s where these LLMs fall short.

Friedhoff also provided a hilarious example of a bot hallucination leading the villagers astray. When Isaac (the seer bot) accused Scott (the werewolf bot) of being suspicious, Scott responded that Isaac had accused the innocent “Liam” of being a werewolf and gotten him unfairly exiled. Isaac responded defensively, and suspicion turned to him, even though Liam didn’t exist and the scenario was made up.

Google’s AI efforts, like Gemini, have become smarter over time. Another GDC panel showcased Google’s vision of generative AI video games that auto-respond to player feedback in real time and have “hundreds of thousands” of LLM-backed NPCs that remember player interactions and respond organically to their questions.

Experiments like this, though, look past Google execs’ bold plans and show how far artificial intelligence has to go before it’s ready to replace actual written dialogue or real-life players.

Chen and Friedhoff managed to imitate the complexity of dialogue, memory, and reasoning that goes into a party game like Werewolf, and that’s genuinely impressive! But these LLM bots need to go back to school before they’re consumer-ready.

In the meantime, Friedhoff says that these kinds of LLM experiments are a great way for game developers to “contribute to machine learning research through games” and that their experiment shows that players are more excited about building and teaching LLM personalities than they are about playing with them.

Ultimately, the idea of mobile games with text-based characters that respond organically to your text responses is intriguing, especially for interactive fiction, which typically requires hundreds of thousands of words of dialogue to give players enough choices.

If the best Android phones with NPUs capable of AI processing could deliver speedy LLM responses for organic games, that could be truly transformative for gaming. This Generative Werewolf experiment is a good reminder that this future is a ways off, however.