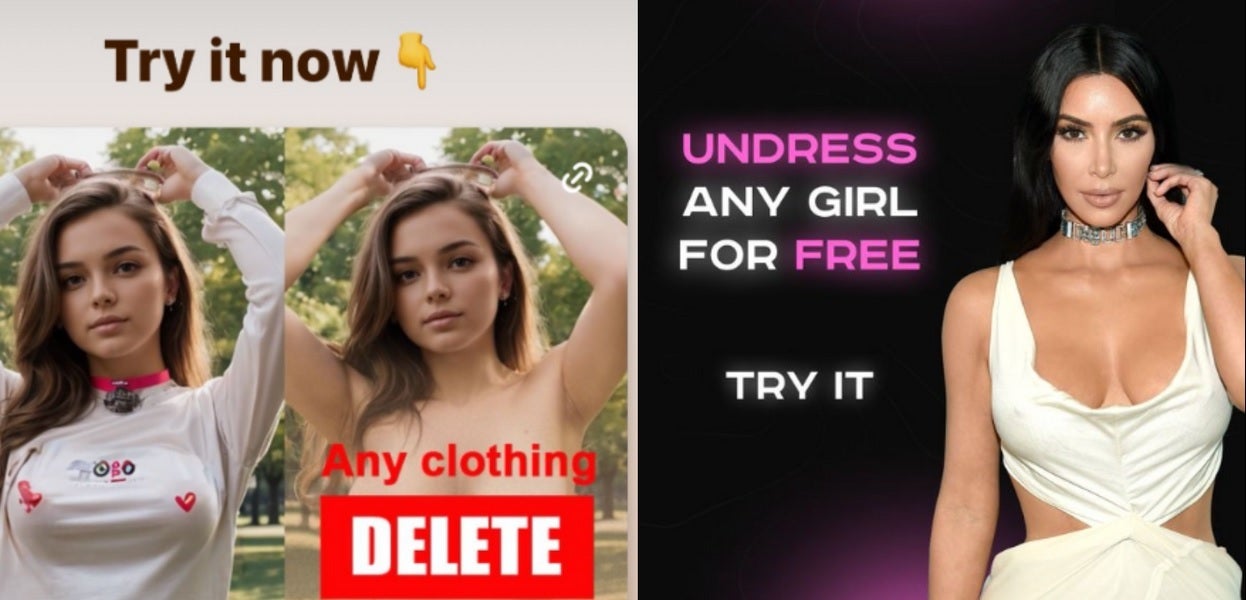

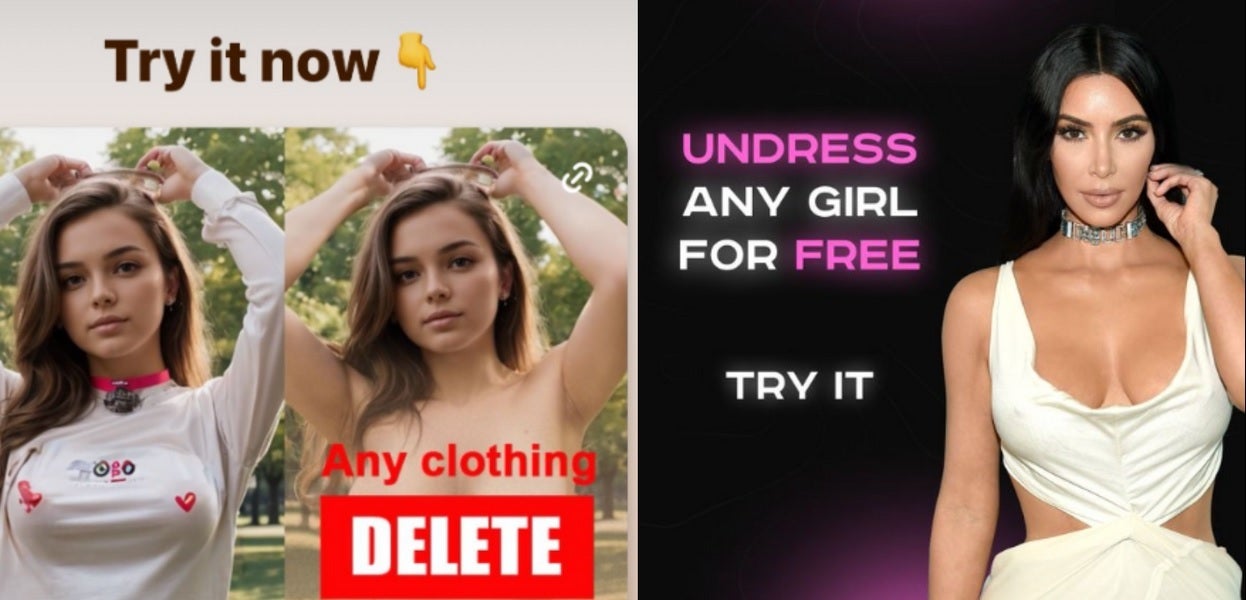

These apps were listed in the App Store as "art generators," but what they actually did was create "nonconsensual nude images." Such images probably do not show what these women really look like without clothes; they rely on AI to generate what appears to be the nude body underneath the clothing. Nevertheless, these pictures could still be used to embarrass or humiliate women and to blackmail them.

404 Media said that Apple removed the apps, but only after the outlet gave the tech giant links to the apps and their ads. The report suggested that Apple could not find the offending apps on its own. While some of these apps offered the AI "undress" feature, others performed face swaps on adult images.

Apps removed from the App Store by Apple had been advertising their ability to create porn on Instagram and adult sites

Some of these apps first appeared as far back as 2022 and looked harmless enough to Apple and Google to be listed in the App Store and Play Store, respectively. Apparently unbeknownst to the tech companies, the developers behind these apps were advertising their porn capabilities on adult sites. Instead of immediately taking the apps down, Apple and Google allowed them to remain in their storefronts as long as they stopped advertising on porn sites.

Despite Apple and Google's demand, one of the apps kept advertising on adult sites until this year, when Google pulled it from the Play Store.